dropping testing feature ml svm | svms scale



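However, one of the challenges with SVMs is interpreting the model, particularly when it comes to understanding which features are most important in making predictions. …

Fit a linear-kernel classifier with `from sklearn import svm` and `clf = svm.SVC(gamma=0.001, C=100., kernel='linear')` (binding the classifier to a name other than `svm` avoids shadowing the imported module, and `gamma` has no effect with a linear kernel), and implement the plot as follows: …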
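
The plotting code is cut off in the source, so the following is only a minimal sketch of the usual idea: rank features by the absolute coefficients of a fitted linear-kernel SVC. The synthetic dataset, the `feature_names` list, and the scaling step are assumptions for illustration, not part of the original snippet.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data (assumption): 6 features, the first 2 are informative.
X, y = make_classification(
    n_samples=300, n_features=6, n_informative=2, n_redundant=0,
    n_clusters_per_class=1, shuffle=False, random_state=0,
)
feature_names = [f"feature_{i}" for i in range(X.shape[1])]  # hypothetical names

# Scale first so that coefficient magnitudes are comparable across features.
X_scaled = StandardScaler().fit_transform(X)

# Linear kernel with C=100 as in the text; gamma is omitted because it is unused here.
clf = SVC(kernel="linear", C=100.0)
clf.fit(X_scaled, y)

# For a binary linear SVC, coef_ has shape (1, n_features).
weights = np.abs(clf.coef_).ravel()
ranking = sorted(zip(feature_names, weights), key=lambda p: p[1], reverse=True)
for name, weight in ranking:
    print(f"{name}: {weight:.3f}")
```

The `ranking` list can be passed to any bar-chart routine. Note that `coef_` is only available for the linear kernel.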


A low error on your training set and a high error on your test set might be an indication that you overfit using an overly flexible feature set. However, it is safer to check this through cross-validation.

If a feature has a variance that is orders of magnitude larger than others, it might dominate the objective function and make the estimator unable to learn from other features.

Permutation feature importance is a model inspection technique that measures the contribution of each feature to a fitted model’s statistical performance on a given tabular dataset. This technique is particularly useful for non-linear or …
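
As a rough sketch of how these points fit together, the example below scales features inside a pipeline, compares training and test accuracy, and computes permutation importance on held-out data. The synthetic dataset and all parameter values are assumptions chosen for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data (assumption): 8 features, the first 3 are informative.
X, y = make_classification(
    n_samples=400, n_features=8, n_informative=3, n_redundant=0,
    n_clusters_per_class=1, shuffle=False, random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# The scaler is fitted on the training split only, via the pipeline.
model = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
model.fit(X_train, y_train)

# A large gap between these two scores would hint at overfitting.
train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")

# Permutation importance on the test split: drop in score when a column is shuffled.
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=0)
for i in result.importances_mean.argsort()[::-1]:
    print(f"feature {i}: {result.importances_mean[i]:.3f} "
          f"+/- {result.importances_std[i]:.3f}")
```

Because it only needs a fitted model and a scoring function, this approach also works for non-linear kernels, where no `coef_` attribute exists.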

Feature selection is important for support vector machine (SVM) classifiers for a variety of reasons. One is enhanced interpretability: by choosing the most relevant features, you gain a clearer understanding of which factors …
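
One simple way to select features ahead of an SVM is univariate selection with an F-test, keeping the top 10% of features. The sketch below assumes synthetic data and puts the selector inside a pipeline so that it is refitted within each cross-validation fold.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectPercentile, f_classif
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data (assumption): 20 features, only a few carry signal.
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=2, n_redundant=0,
    n_clusters_per_class=1, shuffle=False, random_state=0,
)

# Scale, keep the 10% highest-scoring features by F-test, then fit a linear SVM.
model = make_pipeline(
    StandardScaler(),
    SelectPercentile(f_classif, percentile=10),
    SVC(kernel="linear"),
)

# Selection happens inside each fold, so the held-out fold never influences it.
scores = cross_val_score(model, X, y, cv=5)
print(f"mean CV accuracy: {scores.mean():.3f}")

# Fit on all data to inspect which column indices were kept.
model.fit(X, y)
kept = model.named_steps["selectpercentile"].get_support(indices=True)
print("kept feature indices:", kept)
```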

Given an external estimator that assigns weights to features (e.g., the coefficients of a linear model), the goal of recursive feature elimination (RFE) is to select features by recursively …

Support Vector Machines in Python’s Scikit-Learn. In this section, you’ll learn how to use Scikit-Learn in Python to build your own support vector machine model. In order to create support vector machine classifiers in …

The support vector machines in scikit-learn support both dense (`numpy.ndarray` and convertible to that by `numpy.asarray`) and sparse (any `scipy.sparse`) sample vectors as input. However, to …

The support vector machine algorithm is a supervised machine learning algorithm that is often used for classification problems, though it can also be applied to regression problems. … let’s drop these records. `# Dropping` …

Introduction. SVM is a powerful supervised algorithm that works best on smaller but complex datasets. Support Vector Machine, abbreviated as SVM, can be used for …

That's why there are so many different algorithms to handle different kinds of data. One particular algorithm is the support vector machine (SVM), and that's what this article is going to cover in detail. What is an SVM? Support …

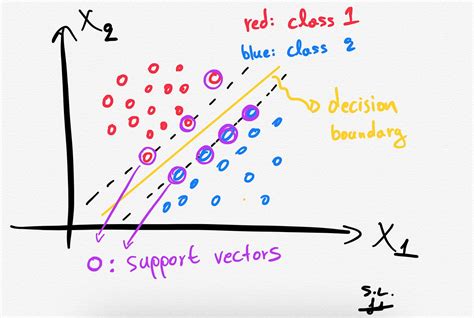

Examples. See the IsolationForest example for an illustration of the use of IsolationForest. See "Comparing anomaly detection algorithms for outlier detection on toy datasets" for a comparison of `ensemble.IsolationForest` with `neighbors.LocalOutlierFactor`, `svm.OneClassSVM` (tuned to perform like an outlier detection method), `linear_model.SGDOneClassSVM`, and a covariance …

Feature importance refers to techniques that assign a score to input features based on how useful they are at predicting a target variable. There are many types and sources of feature importance scores, although popular examples include statistical correlation scores, coefficients calculated as part of linear models, decision trees, and permutation importance …

How does a support vector machine work in the context of machine learning? A Support Vector Machine (SVM) works by finding a hyperplane in an N-dimensional space (N being the number of features) that distinctly classifies the data points. To separate two classes of data points, there are many possible hyperplanes that could be chosen.
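
To make the hyperplane idea concrete, here is a small sketch that fits a linear SVC on two synthetic blobs (an assumption for illustration) and checks that the classifier's decision values equal `w · x + b`, using the fitted `coef_` and `intercept_`.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

# Two 2-D clusters (assumption): the separating hyperplane is a line here.
X, y = make_blobs(n_samples=100, centers=2, n_features=2, random_state=0)

clf = SVC(kernel="linear", C=1.0).fit(X, y)

w = clf.coef_[0]        # normal vector of the hyperplane
b = clf.intercept_[0]   # offset of the hyperplane

# Signed score for every sample: positive on one side, negative on the other.
manual_scores = X @ w + b
manual_pred = clf.classes_[(manual_scores > 0).astype(int)]

# Distance between the two margin boundaries w.x + b = +1 and w.x + b = -1.
margin_width = 2.0 / np.linalg.norm(w)

print("matches decision_function:", np.allclose(manual_scores, clf.decision_function(X)))
print("matches predict:", np.array_equal(manual_pred, clf.predict(X)))
print(f"margin width: {margin_width:.3f}")
```

Among all separating hyperplanes, the SVM picks the one that makes `margin_width` as large as possible, subject to the penalty `C` on margin violations.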

Then, fit your model on the training set using `fit()` and perform prediction on the test set using `predict()`.

    # Import the svm module
    from sklearn import svm

    # Create an SVM classifier with a linear kernel
    clf = svm.SVC(kernel='linear')

    # Train the model using the training sets
    clf.fit(X_train, y_train)

    # Predict the response for the test dataset
    y_pred = clf.predict(X_test)

How can I show the important features that contribute to the SVM model along with the feature name? … `X = df_new.drop('numeric values', axis=1).values` Then I set up the pipeline …

A related snippet applies univariate feature selection with an F-test for feature scoring, using the default selection function (the 10% most significant features): `selector` …

What is a Support Vector Machine (SVM)? A support vector machine (SVM) is a supervised machine learning algorithm used for both classification and regression. It works by finding the hyperplane that best separates the two classes of data. The hyperplane is the line or curve that has the maximum margin between the two classes.

The Support Vector Machine algorithm is commonly used within classification problems. SVMs distinguish between classes by finding the maximum margin between the closest data points of opposite classes, creating the optimal hyperplane. The number of features in the input data determines if the hyperplane is a line in a 2D space or a plane in an N-dimensional …

I want to train a new HoG classifier for heads and shoulders using OpenCV 3.x Python bindings. What is my pipeline for extracting features, training an SVM, and then running it on the test databas…

Feature selection becomes prominent, especially in data sets with many variables and features. It eliminates unimportant variables and improves the accuracy as well as the performance of classification. Random Forest has emerged as a quite useful algorithm that can handle the feature selection issue even with a higher number of variables. In this paper, …

Here is an example of preparing data for SVR:

    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import StandardScaler

    # Load dataset
    data = pd.read_csv('your_dataset.csv')
    X = data.drop('target', axis=1)
    y = data['target']

    # Handle missing values in the numeric columns
    X = X.fillna(X.mean(numeric_only=True))

    # Encode categorical features (any further arguments are cut off in the source)
    X = pd.get_dummies(X)

SVM stands for support vector machine, and although it can solve both classification and regression problems, it is mainly used for classification problems in machine learning (ML). SVM models help us classify new data points based on previously classified similar data, making it a supervised machine learning technique.
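
The SVR preparation snippet above stops before the split and scaling steps. The following is a sketch of how the remaining steps might look, not the original author's code. It uses a small synthetic DataFrame in place of `your_dataset.csv`, and the column names `x1`, `x2`, and `group` are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler
from sklearn.svm import SVR

# Synthetic stand-in for the CSV file (assumption); x2 has a much larger scale than x1.
rng = np.random.default_rng(0)
n = 200
data = pd.DataFrame({
    "x1": rng.normal(size=n),
    "x2": rng.normal(scale=1000.0, size=n),
    "group": rng.choice(["a", "b"], size=n),
})
data["target"] = (3 * data["x1"] + 0.002 * data["x2"]
                  + (data["group"] == "b") * 1.0
                  + rng.normal(scale=0.1, size=n))
data.loc[::25, "x1"] = np.nan  # inject a few missing values

X = data.drop("target", axis=1)
y = data["target"]

# Split before any fitting so that test rows do not leak into imputation or scaling.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Numeric columns: mean imputation, then standardization. Categorical: one-hot.
numeric = make_pipeline(SimpleImputer(strategy="mean"), StandardScaler())
preprocess = ColumnTransformer([
    ("num", numeric, ["x1", "x2"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["group"]),
])

model = make_pipeline(preprocess, SVR(kernel="rbf", C=10.0))
model.fit(X_train, y_train)

predictions = model.predict(X_test)
r2 = model.score(X_test, y_test)
print(f"test R^2: {r2:.3f}")
```

Keeping the preprocessing inside the pipeline means the same fitted imputer, scaler, and encoder are reused at prediction time.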

Machine learning models take vectors (arrays of numbers) as input. When working with text, the first thing you must do is come up with a strategy to convert strings to numbers (or to "vectorize" the text) before feeding it to the model.
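
As a minimal illustration of vectorizing text before feeding it to an SVM, the sketch below uses scikit-learn's `TfidfVectorizer` with a `LinearSVC`. The tiny corpus and its labels are made up for the example.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import make_pipeline
from sklearn.svm import LinearSVC

# Toy corpus (assumption): 1 = promotional message, 0 = work message.
texts = [
    "free prize, claim your reward now",
    "win cash now, limited offer",
    "meeting moved to friday morning",
    "please review the attached report",
    "claim your free cash reward",
    "agenda for the friday meeting",
]
labels = [1, 1, 0, 0, 1, 0]

# The vectorizer turns each string into a sparse row of TF-IDF weights.
model = make_pipeline(TfidfVectorizer(), LinearSVC())
model.fit(texts, labels)

vectorizer = model.named_steps["tfidfvectorizer"]
X = vectorizer.transform(texts)
print("matrix shape (documents, vocabulary size):", X.shape)

pred = model.predict(["free cash reward", "friday report meeting"])
print("predictions:", pred.tolist())
```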

An SVM was trained on a regression dataset with 50 random features and 200 instances. The SVM overfits the data: feature importance based on the training data shows many important features. Computed on unseen test data, the feature …

Support Vector Machine (or SVM) is a supervised machine learning algorithm that can be used for classification or regression problems. It uses a technique called the **kernel trick** to transform data and finds an optimal decision …
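
A quick way to see what the kernel trick buys is to compare a linear kernel with an RBF kernel on data that no straight line can separate. The concentric-circles dataset below is an assumption chosen for illustration.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Two concentric rings (assumption): not linearly separable in the input space.
X, y = make_circles(n_samples=400, factor=0.3, noise=0.05, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Same estimator, different kernels.
linear_acc = SVC(kernel="linear").fit(X_train, y_train).score(X_test, y_test)
rbf_acc = SVC(kernel="rbf").fit(X_train, y_train).score(X_test, y_test)

print(f"linear kernel accuracy: {linear_acc:.3f}")
print(f"RBF kernel accuracy:    {rbf_acc:.3f}")
```

The RBF kernel implicitly compares points in a higher-dimensional space, where the two rings can be separated by a hyperplane.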

NOTE: This article is the second in a series of articles regarding classification using the SafeGraph Patterns data. The first article goes into the analysis of the initial classification of the data as Bus Stops, Airports, and Train Stations using several multiclass classifiers such as the Gaussian Naive Bayes Classifier, the Decision Tree …

SVM stands for Support Vector Machine. SVM is a supervised machine learning algorithm that is commonly used for classification and regression challenges.


What is a Support Vector Machine (SVM)? “Support Vector Machine” is a supervised machine learning algorithm that can be used for both classification and regression challenges. However, it is mostly used in classification problems, such …

As datasets continue to increase in size, it is important to select the optimal feature subset from the original dataset to obtain the best performance in machine learning tasks. High-dimensional datasets that have an excessive number of features can cause low performance in such tasks. Overfitting is a typical problem. In addition, datasets that are of …

Support Vector Machine is a popular supervised machine learning algorithm. It is used for both classification and regression. In this article, we will discuss the One-Class Support Vector Machine model. One-Class Support Vector Machine is a special variant of Support Vector Machine that is primarily designed for outlier …
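
A minimal sketch of a One-Class SVM with scikit-learn's `OneClassSVM` follows. The training data, the two query points, and the `nu` value are assumptions for illustration.

```python
import numpy as np
from sklearn.svm import OneClassSVM

# Training data (assumption): "normal" observations clustered around the origin.
rng = np.random.default_rng(0)
X_train = rng.normal(loc=0.0, scale=1.0, size=(200, 2))

# nu is an upper bound on the fraction of training points treated as outliers.
detector = OneClassSVM(kernel="rbf", gamma="scale", nu=0.05)
detector.fit(X_train)

# One point close to the training cloud and one far away from it.
X_new = np.array([[0.1, -0.2], [8.0, 8.0]])
labels = detector.predict(X_new)  # +1 means inlier, -1 means outlier
print(labels.tolist())
```

The model is fitted without labels: it learns a boundary around the training data and flags points that fall outside it.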

The support vector machine uses two or more labelled classes of data. It separates two different classes of data by a hyperplane. … Imagine there is a feature space (a blank piece of paper). Now, imagine a line cutting through it from the center. … In any ML method, we would have the training and testing data. So here we have an n × p matrix …

Recursive Feature Elimination, or RFE for short, is a popular feature selection algorithm. RFE is popular because it is easy to configure and use and because it is effective at selecting those features (columns) in a training dataset that are more or most relevant in predicting the target variable. There are two important configuration options when using RFE: the choice …
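
RFE can be sketched with scikit-learn's `RFE` class wrapped around a linear-kernel SVC, whose coefficients supply the feature weights. The synthetic dataset and the values of `n_features_to_select` and `step` below are assumptions for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data (assumption): 10 features, 3 of them informative.
X, y = make_classification(
    n_samples=300, n_features=10, n_informative=3, n_redundant=0,
    n_clusters_per_class=1, shuffle=False, random_state=0,
)
X = StandardScaler().fit_transform(X)

# The estimator must expose coef_ or feature_importances_; a linear SVC does.
selector = RFE(estimator=SVC(kernel="linear"), n_features_to_select=3, step=1)
selector.fit(X, y)

# support_ marks the kept columns; ranking_ is 1 for kept, larger for dropped earlier.
print("kept columns:", selector.get_support(indices=True).tolist())
print("ranking:", selector.ranking_.tolist())

X_reduced = selector.transform(X)
print("reduced shape:", X_reduced.shape)
```

At each round the feature with the smallest absolute weight is removed and the model is refitted, until only the requested number of features remains.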

where x is the feature vector, w is the feature weights vector with the same size as x, and b is the bias term. This formula should be familiar from our journey through Linear Regression or Logistic Regression. In the case of binary classification, which we consider at the moment, SVM requires that the positive label has a numeric value of 1, and the negative label …

Background: Support vector machines (SVM) are a powerful tool to analyze data with a number of predictors approximately equal to or larger than the number of observations. However, originally, application of SVM to analyze biomedical data was limited because SVM was not designed to evaluate the importance of predictor variables. Creating predictor models based …

This step involves feature engineering, scaling, encoding categorical variables, and splitting the dataset into training and testing sets. Proper preprocessing ensures that the data is well-structured and prepared for modeling. Feature engineering involves creating new features from existing data to improve model performance.



